The Hidden Struggle: How a Mental Health SaaS Could Revolutionize Depression Support

Depression is often misunderstood as mere sadness, but for many it is a silent battle against numbness, exhaustion, and emotional suppression. People with strong willpower can be among the most vulnerable, because they tend to internalize their struggles rather than voice them. This article explores how a specialized SaaS platform could provide the tools and community needed to break this cycle, offering a lifeline to those who feel isolated in their pain.

The Problem: Silent Suffering and Misunderstood Emotions

Depression manifests uniquely in each individual, but common threads emerge: emotional numbness, self-isolation, and a relentless internalization of pain. Many sufferers describe feeling 'stuck'—unable to articulate their emotions or access effective support. Comments like 'I don’t know how I feel' or 'I can't talk to anybody, they struggle more than me' highlight the crippling self-doubt and loneliness that accompany this condition. Traditional support systems often fail these individuals, dismissing their pain with platitudes like 'others have it worse.'

The SaaS Idea: A Personalized Mental Health Ecosystem

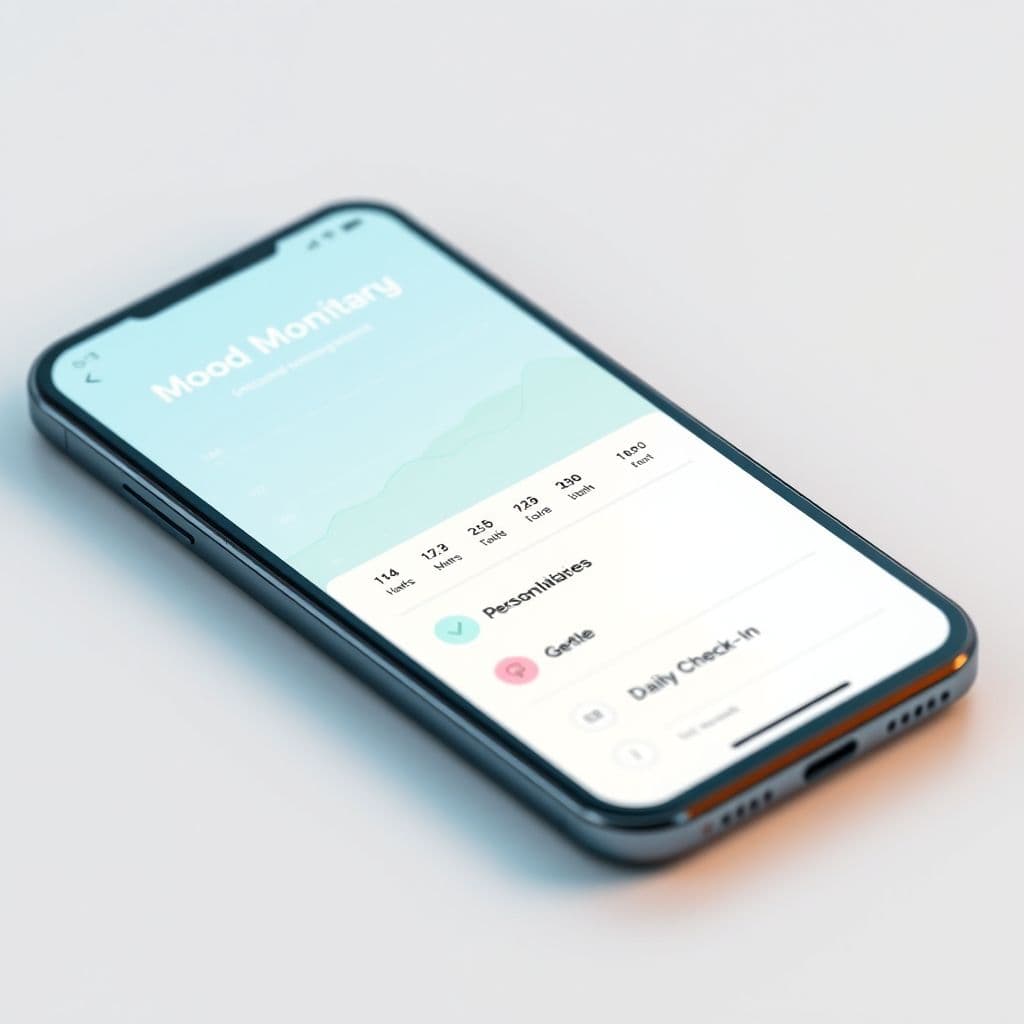

Imagine a platform that combines AI-driven emotional assessments with human expertise. Users could start with anonymous, adaptive questionnaires that help them identify patterns in their mood and behavior—addressing the common cry, 'I wish I knew what is wrong with me.' The system would then guide them toward tailored resources: licensed therapists (available via secure video or chat), evidence-based coping strategies, or peer support groups for those who relate to comments like 'It becomes like a companion you fear losing.'
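To make the idea of an adaptive questionnaire concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Question` class, the 0 to 4 answer scale, the follow-up cutoff, and the routing rules in `suggest_resources` are hypothetical and are not clinical thresholds or a validated screening instrument.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    key: str
    text: str
    # Hypothetical cutoff on a 0-4 scale; not a clinical threshold.
    follow_up_at: int = 3
    follow_ups: list["Question"] = field(default_factory=list)


def run_questionnaire(questions, answer_fn):
    """Ask each question; ask its follow-ups only when the answer is high."""
    answers = {}
    pending = list(questions)
    while pending:
        question = pending.pop(0)
        score = answer_fn(question)  # expected to return an int from 0 to 4
        answers[question.key] = score
        if score >= question.follow_up_at:
            # Follow-ups are asked immediately, before the remaining questions.
            pending = question.follow_ups + pending
    return answers


def suggest_resources(answers):
    """Map answers to resource types using illustrative, made-up rules."""
    suggestions = ["evidence-based coping strategies"]
    if answers.get("isolation", 0) >= 3:
        suggestions.append("peer support group")
    if max(answers.values(), default=0) >= 4:
        suggestions.append("licensed therapist (video or chat)")
    return suggestions


QUESTIONS = [
    Question(
        "numbness",
        "How often have you felt emotionally numb this week?",
        follow_ups=[Question("isolation", "How often did you avoid people you trust?")],
    ),
    Question("sleep", "How often did you sleep poorly this week?"),
]
```

In a real product the question bank and routing rules would need to come from clinicians; the point of the sketch is only that the "adaptive" part can be as simple as asking follow-up questions when an earlier answer suggests they are relevant.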

Key features might include emotion-tracking journals with smart prompts ('What is this feeling trying to tell me?'), crisis intervention tools, and educational content debunking myths (e.g., 'Only weak people get depressed'). A community forum could foster connection while maintaining anonymity—vital for users who worry, 'How can I repost this without my friends finding out?'
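One way a forum could let people participate without exposing their identity is to show a derived handle instead of an account name. The sketch below is an assumption, not a description of any existing platform: `forum_pseudonym` and the `member-` prefix are hypothetical names, and the approach (a keyed hash of the account ID using Python's standard `hmac` module) gives pseudonymity rather than full anonymity, since the operator holding the secret can still link handles to accounts.

```python
import hashlib
import hmac


def forum_pseudonym(user_id: str, server_secret: bytes) -> str:
    """Derive a stable forum handle from an account ID.

    The same user always gets the same handle, but the handle does not
    contain the account ID, and it cannot be recomputed from a guessed ID
    without the server-side secret.
    """
    digest = hmac.new(server_secret, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncated for readability; a real system would need to handle collisions.
    return f"member-{digest[:10]}"
```

A call such as `forum_pseudonym("user-123", secret)` returns the same handle every time for that user, so conversations stay coherent while friends browsing the forum see no real names.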

Potential Use Cases

1. The Overwhelmed Student: After failing exams due to brain fog ('Depression made me fail in my studies'), they use the platform’s focus-boosting exercises and connect with a counselor specializing in academic pressure.

2. The Silent Sufferer: Someone who 'doesn’t ask for help' begins with anonymous forum interactions, gradually transitioning to teletherapy.

3. The Confused Individual: A user questioning, 'Is it depression or something else?' takes a guided assessment, receiving a preliminary analysis and recommended next steps.

Conclusion

Depression thrives in isolation, but technology can help bridge gaps in understanding and access. This SaaS concept remains hypothetical, yet its potential to broaden access to mental health support is significant. By meeting users where they are, whether in confusion, numbness, or quiet despair, such a platform could rewrite the narrative of suffering alone.

Frequently Asked Questions

- How would this SaaS protect user privacy?

- The hypothetical platform would use end-to-end encryption for therapy sessions, allow pseudonymous forum participation, and comply with HIPAA/GDPR. Users could control data sharing granularly.

- Isn't this just like existing therapy apps?

- While apps like BetterHelp focus on therapy, this concept integrates assessment tools, community support, and educational resources into a unified ecosystem, addressing the full spectrum of needs expressed in the TikTok comments quoted throughout this article.

- Could AI really understand human emotions?

- The AI component wouldn't replace human judgment but could flag patterns (e.g., sleep disturbances correlating with low mood) and suggest resources, always with options to connect to live professionals.
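As a rough illustration of what "flagging a pattern" could mean, the sketch below computes a Pearson correlation between nightly sleep hours and daily mood scores and raises a flag when the two move together strongly. The function names, the 1 to 5 mood scale, and the 0.6 threshold are all assumptions made for this example; a correlation over a handful of journal entries is a prompt for a conversation with a professional, not a diagnosis.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # Correlation is undefined for constant data; treat it as no signal.
        return 0.0
    return cov / sqrt(var_x * var_y)


def flag_sleep_mood_pattern(sleep_hours, mood_scores, threshold=0.6):
    """Return True when less sleep tends to coincide with lower mood.

    `threshold` is a hypothetical cutoff chosen for illustration only.
    """
    return pearson(sleep_hours, mood_scores) >= threshold
```

A flag like this would only surface a suggestion, for example sleep-related resources or an offer to connect with a live professional, which keeps the human judgment described above in the loop.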