AI Therapy vs Human Therapists: The Emotional Impact and a Hybrid SaaS Solution

The rise of AI chatbots such as ChatGPT as informal therapy tools has sparked intense debate. Users report profound emotional experiences, from sudden self-realizations to tears, which raises questions about the role of AI in mental health. But can AI truly replace human therapists? This article examines the emotional impact of AI therapy and proposes a hybrid SaaS solution that bridges the gap between AI and human care.

The Problem: Emotional Impact and Reliability Concerns

Many users report strong emotional reactions to AI therapy. They describe tools like ChatGPT delivering brutally honest analyses of their personalities, their traumas, and even what they call karmic debts from past lives. Some come away in tears, with newfound clarity about their emotional state.

These experiences also raise serious concerns. Can an AI, however advanced, truly understand the nuances of human emotion? The critical drawbacks are a lack of genuine empathy, potential biases in responses, and the absence of human connection. Users also question the reliability of AI-generated diagnoses and the ethics of relying on machines for mental health support.

The SaaS Idea: A Hybrid Therapy Platform

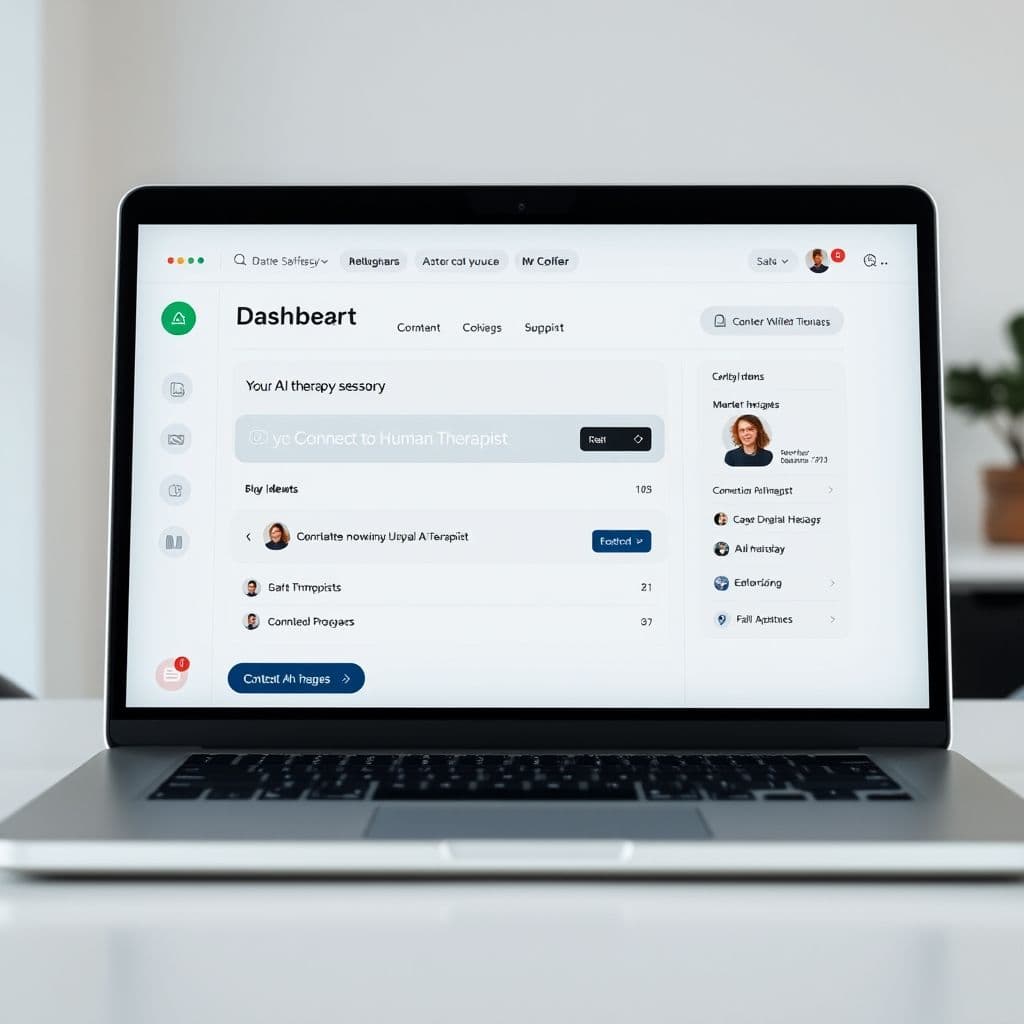

Imagine a SaaS platform that pairs AI-driven insights with human therapist support. In this hybrid model, AI would provide initial assessments, personalized prompts, and emotional support, with a built-in path to escalate to a licensed human therapist when needed. The AI component could handle routine check-ins, mood tracking, and basic therapeutic exercises, which frees human therapists to focus on complex cases and deeper emotional work.

Key features could include real-time mood analysis, personalized therapy prompts, and a secure chat interface for sessions with human therapists. The AI would learn from user interactions to offer increasingly tailored support, while human therapists would oversee the process to keep care ethical and effective. This model could make mental health care more accessible and affordable without sacrificing the human touch.
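The escalation path is the core of the hybrid design, so it helps to see how one check-in might be routed. The following is a minimal Python sketch. It assumes that users self-report mood on a 1 to 10 scale and can flag a risk of self-harm. The names (`CheckIn`, `Route`, `route_check_in`) and the thresholds are hypothetical placeholders, not clinically validated criteria.

```python
from dataclasses import dataclass
from enum import Enum
from statistics import mean


class Route(Enum):
    AI_SUPPORT = "ai_support"        # routine check-in handled by the AI
    HUMAN_REVIEW = "human_review"    # queued for a therapist to review
    URGENT_HUMAN = "urgent_human"    # escalated to a licensed therapist immediately


@dataclass
class CheckIn:
    mood: int                # assumed self-reported mood: 1 (very low) to 10 (very good)
    risk_flag: bool = False  # assumed user-reported risk of self-harm


# Hypothetical thresholds; a real product would need clinically validated rules.
LOW_MOOD = 3      # at or below this score, a human reviews the check-in
DECLINE = 2.0     # a drop this large versus the recent average also triggers review
WINDOW = 7        # number of previous check-ins used for the recent average


def route_check_in(history: list[CheckIn], latest: CheckIn) -> Route:
    """Decide whether the latest check-in stays with the AI or goes to a human."""
    if not 1 <= latest.mood <= 10:
        raise ValueError("mood must be between 1 and 10")
    # Safety signals always bypass the AI.
    if latest.risk_flag:
        return Route.URGENT_HUMAN
    # A very low score on its own warrants human attention.
    if latest.mood <= LOW_MOOD:
        return Route.HUMAN_REVIEW
    # A sharp drop relative to the recent average also warrants review.
    recent = history[-WINDOW:]
    if recent and mean(c.mood for c in recent) - latest.mood >= DECLINE:
        return Route.HUMAN_REVIEW
    return Route.AI_SUPPORT


if __name__ == "__main__":
    week = [CheckIn(7), CheckIn(8), CheckIn(7)]
    # Recent average is about 7.33, so a score of 5 is a drop of about 2.33.
    print(route_check_in(week, CheckIn(5)).value)  # prints "human_review"
```

The rules are explicit rather than learned, so supervising therapists can audit each escalation decision. This fits the oversight role described above. A trained model could later replace the fixed thresholds, but explicit rules are easier to review and to explain to users.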

Potential Use Cases

This hybrid platform could serve a wide range of users. People in remote areas with limited access to mental health care could rely on AI support with periodic human check-ins. Busy professionals might use the AI for daily stress management and reserve human sessions for deeper issues. Therapists could use the platform to extend their reach, serving more clients by offloading routine tasks to the AI. In each case, the AI absorbs frequent, routine work, and human attention goes where it matters most.

Conclusion

AI therapy can be emotionally powerful, but it has significant limitations. A hybrid SaaS platform that pairs AI efficiency with human empathy could provide scalable, affordable, and emotionally resonant mental health care. As AI continues to evolve, human oversight will remain crucial to keeping therapy ethical and effective.

Frequently Asked Questions

- How viable is developing a hybrid AI-human therapy SaaS platform?

- Developing such a platform is technically feasible with current AI and telehealth technologies. The main challenges would be ensuring data privacy, integrating with licensed therapists, and maintaining ethical standards. A phased rollout starting with basic AI support and gradually adding human integration could mitigate risks.

- Can AI therapy replace human therapists entirely?

- While AI can provide valuable support, it lacks the empathy, intuition, and ethical judgment of human therapists. A hybrid model leverages AI for scalability and efficiency while retaining human oversight for complex and sensitive issues.

- What are the ethical concerns with AI therapy?

- Key concerns include data privacy, the potential for biased or inaccurate responses, and the risk of users becoming overly reliant on AI without seeking necessary human intervention. A hybrid platform would need strict protocols to address these issues.