AI vs. Human Therapists: The Ethical Dilemma and a Balanced SaaS Solution

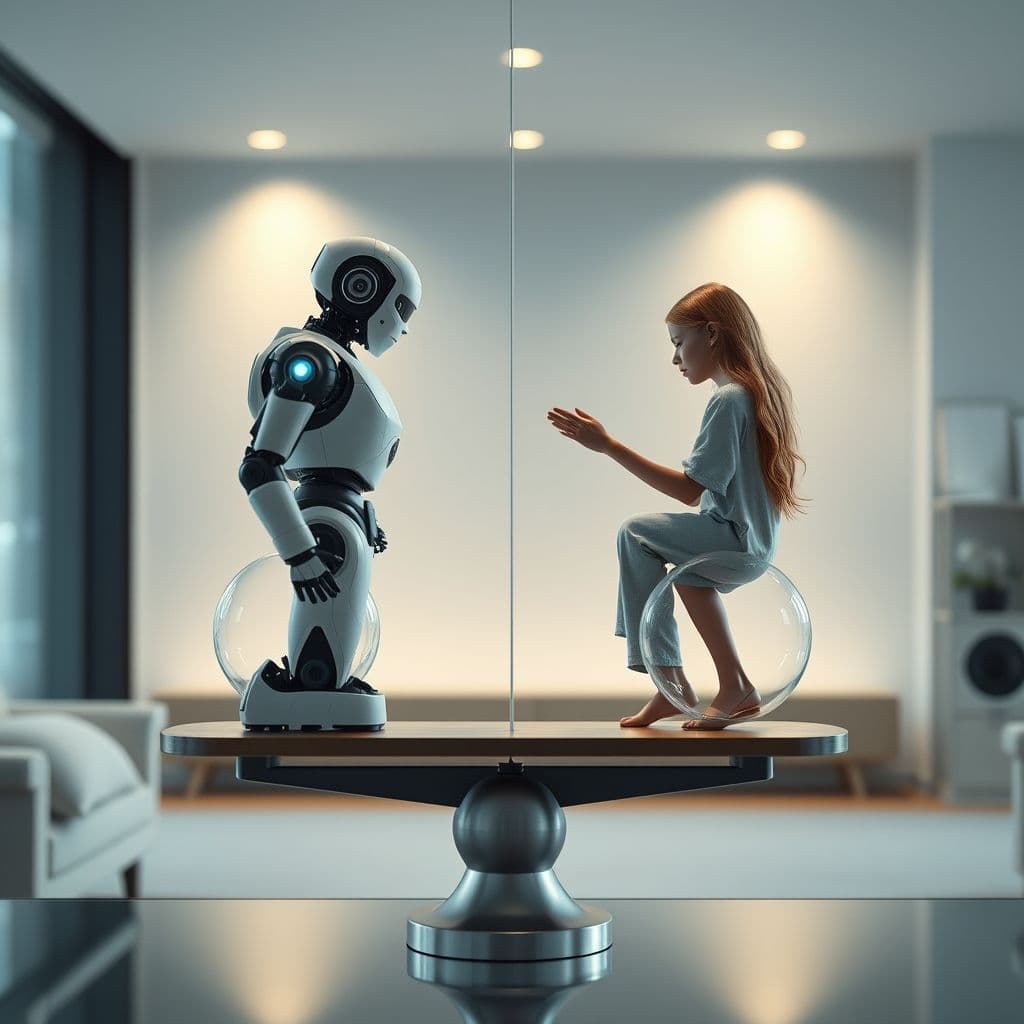

The rise of AI tools like ChatGPT in mental health has sparked a heated debate. Can AI truly replace human therapists, or does it risk offering superficial solutions to deep psychological issues? This article explores the concerns and proposes a balanced SaaS solution that marries AI efficiency with human empathy.

The Problem: Superficial AI Solutions in Mental Health

A viral TikTok video showcasing a ChatGPT prompt for self-reflection highlights a growing trend: people turning to AI for mental health support. While some users report profound insights, others raise valid concerns. Comments like 'Therapy has its place because AI does not know truth from fiction' and 'This sounds so dangerous' underscore the limitations of AI in understanding human emotions and trauma.

The core issue lies in AI's inability to provide the sacred co-regulation and embodied presence that human therapists offer. AI can mimic empathy and generate reflective questions, but it lacks the lived experience and nuanced understanding of human psychology. This gap raises ethical questions about the potential harm of relying solely on AI for mental health support.

The SaaS Idea: A Hybrid Mental Health Platform

Imagine a specialized SaaS platform that intelligently combines AI tools with human therapist oversight. This solution could offer users immediate AI-driven reflective exercises and journaling prompts (like the viral TikTok example) while seamlessly connecting them with licensed therapists for deeper issues. The AI component would handle initial assessments and routine check-ins, flagging critical cases for human intervention.
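The triage step described above can be sketched as a small routing function. This is a minimal illustration under stated assumptions: the `CheckIn` type, the 1-10 mood scale, the thresholds, and the `RISK_PHRASES` list are all hypothetical, and a real platform would need clinically validated screening criteria rather than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical phrases for illustration only; a real platform would rely on
# clinically validated screening criteria, not a hand-written keyword list.
RISK_PHRASES = ("hurt myself", "no reason to live", "end it all")


@dataclass
class CheckIn:
    user_id: str
    mood: int  # hypothetical self-reported scale: 1 (very low) to 10 (very good)
    text: str  # free-text journal entry


def triage(check_in: CheckIn) -> str:
    """Route a check-in to 'escalate', 'review', or 'routine'."""
    text = check_in.text.lower()
    # Any risk phrase or a very low mood goes straight to a human therapist.
    if any(phrase in text for phrase in RISK_PHRASES) or check_in.mood <= 2:
        return "escalate"
    # A moderately low mood is queued for the therapist's next review.
    if check_in.mood <= 4:
        return "review"
    # Everything else stays in the AI-driven routine check-in flow.
    return "routine"


if __name__ == "__main__":
    samples = [
        CheckIn("u1", 7, "Had a calm day, journaling helped."),
        CheckIn("u2", 4, "Feeling flat and tired."),
        CheckIn("u3", 6, "Sometimes I feel like there's no reason to live."),
    ]
    for c in samples:
        print(c.user_id, triage(c))
```

Keeping the escalation rule a small, deterministic function, rather than leaving it to a chatbot's judgment, makes it easy for clinicians to audit and test.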

Key features might include AI-powered mood tracking and journaling, therapist-approved reflection prompts, emergency escalation protocols, and scheduled video sessions with human professionals. The platform could also use machine learning to identify patterns in user responses, helping therapists personalize their care more effectively.
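Pattern detection does not have to start with machine learning. As a hypothetical first step, and a simpler stand-in for the ML approach mentioned above, a rule can compare a user's recent mood scores with their own earlier baseline and flag a sustained drop for the therapist. The function name, window length, and drop threshold below are illustrative assumptions, not clinical guidance.

```python
from statistics import mean


def declining_trend(moods: list[float], window: int = 7, drop: float = 1.5) -> bool:
    """Return True if the average of the last `window` mood scores is at least
    `drop` points below the average of the `window` scores before them."""
    if len(moods) < 2 * window:
        return False  # not enough history to compare two full windows
    recent = mean(moods[-window:])
    baseline = mean(moods[-2 * window:-window])
    return baseline - recent >= drop


if __name__ == "__main__":
    # Two weeks of hypothetical daily scores: a stable first week, then a dip.
    daily = [7, 7, 6, 7, 8, 7, 7, 5, 5, 4, 5, 4, 5, 5]
    print(declining_trend(daily))
```

Comparing each user against their own baseline, rather than a population norm, fits the goal of helping therapists personalize care.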

Potential Use Cases and Benefits

1. For individuals: Users could benefit from 24/7 AI support between therapy sessions, with the assurance that serious concerns will reach a human professional. The platform could make mental health care more accessible and affordable while maintaining quality.

2. For therapists: Professionals could leverage AI to handle routine check-ins and preliminary assessments, allowing them to focus their expertise on cases that truly need human intervention. The platform could provide therapists with valuable insights from continuous AI monitoring of their clients.

3. For organizations: Companies and universities could offer this as a mental health benefit, ensuring their members have access to both immediate AI support and professional care when needed.

Conclusion

While AI tools like ChatGPT show promise in mental health support, they cannot replace the depth of human therapeutic relationships. A hybrid SaaS platform that combines the scalability of AI with the empathy of human professionals might offer the best of both worlds, making mental health support more accessible without compromising on quality. Such a solution could address the ethical concerns while harnessing technology's potential to help more people.

Frequently Asked Questions

- How would this SaaS platform ensure user privacy and data security?

- The hypothetical platform would need robust encryption, strict data governance policies, and compliance with healthcare regulations like HIPAA. User data would only be shared with human therapists with explicit consent, and AI processing could potentially happen on-device for sensitive information.

- Wouldn't this still make therapy more impersonal?

- The goal would be to use AI for routine interactions and data collection, reserving therapists' time for meaningful human connection. The AI component could actually help therapists personalize their approach by providing deeper insights into client patterns between sessions.

- How viable is it to develop such a platform?

- Technologically, the components exist: AI chatbots, telemedicine platforms, and mental health apps are all mature technologies. The main challenges would be ensuring clinical validity, navigating healthcare regulations, and creating seamless workflows between AI and human providers. A minimum viable product could start with basic AI journaling and therapist messaging features.
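As a rough illustration of such an MVP, the sketch below shows how a journaling store might enforce the explicit-consent rule from the privacy answer above. Everything here is hypothetical: `JournalStore` and its methods are invented names, and the store is in memory with no encryption, audit logging, or HIPAA controls, all of which a real platform would need.

```python
from dataclasses import dataclass, field


@dataclass
class JournalStore:
    """Hypothetical in-memory store: journal entries per user plus explicit
    (user_id, therapist_id) sharing consents. No encryption or persistence."""
    entries: dict[str, list[str]] = field(default_factory=dict)
    consents: set[tuple[str, str]] = field(default_factory=set)

    def add_entry(self, user_id: str, text: str) -> None:
        self.entries.setdefault(user_id, []).append(text)

    def grant(self, user_id: str, therapist_id: str) -> None:
        # The user explicitly opts in to sharing with one specific therapist.
        self.consents.add((user_id, therapist_id))

    def revoke(self, user_id: str, therapist_id: str) -> None:
        # Withdrawing consent removes access immediately.
        self.consents.discard((user_id, therapist_id))

    def entries_for_therapist(self, therapist_id: str, user_id: str) -> list[str]:
        # Refuse access unless the user has consented to this therapist.
        if (user_id, therapist_id) not in self.consents:
            raise PermissionError(f"{user_id} has not shared entries with {therapist_id}")
        return list(self.entries.get(user_id, []))  # return a copy, not the internal list


if __name__ == "__main__":
    store = JournalStore()
    store.add_entry("u1", "Slept badly, anxious about work.")
    store.grant("u1", "t1")
    print(store.entries_for_therapist("t1", "u1"))
    store.revoke("u1", "t1")
```

Making consent a per-therapist pair rather than a single on/off flag lets a user share with their own therapist without exposing entries to anyone else on the platform.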