AI-Generated Tests: The Hidden Pitfalls and a Potential SaaS Solution

AI-generated tests promise to save developers time, but many developers find that they create more problems than they solve. From tests that always pass to tests that are outright misleading, the current state of AI in testing leaves much to be desired. In this article, we'll explore the root causes of these issues and outline a hypothetical SaaS solution designed to address them.

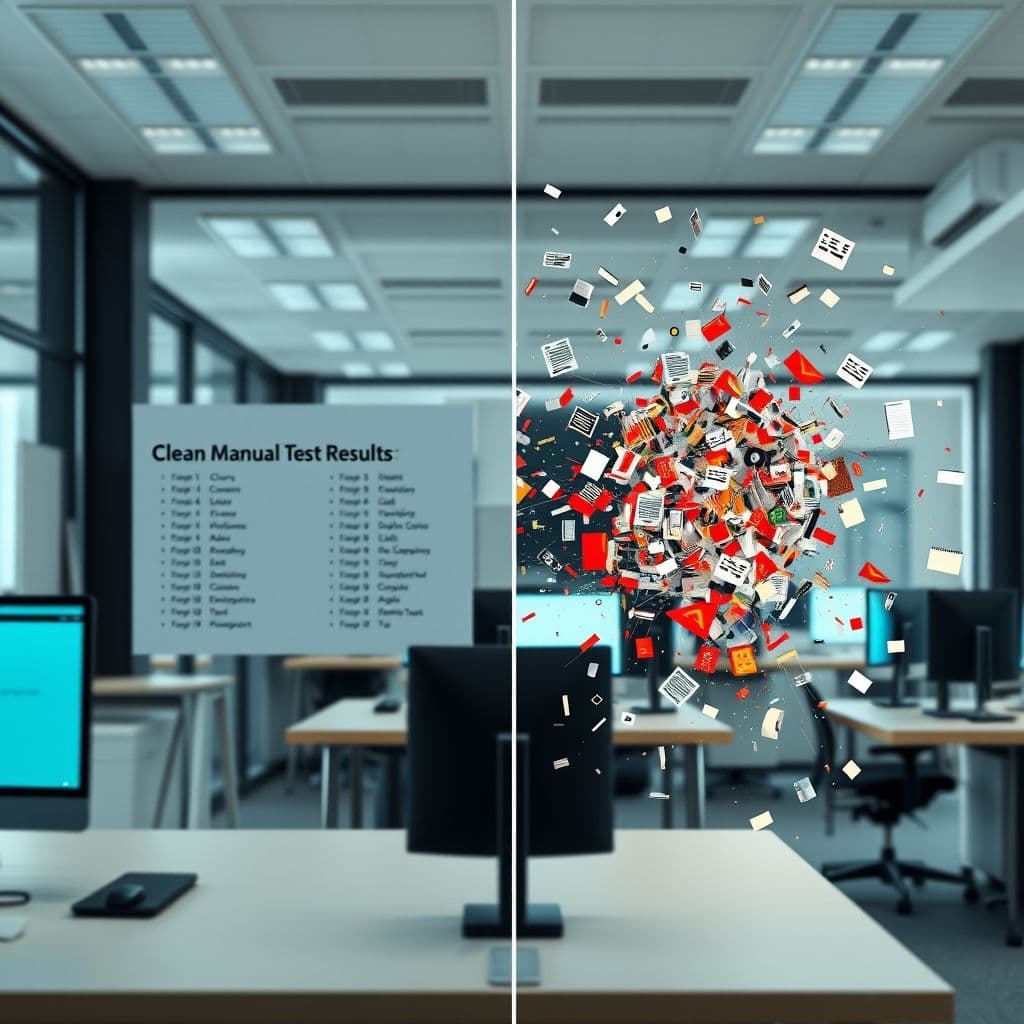

The Problem: Why AI-Generated Tests Often Fail

The fundamental issue with current AI testing tools is that they cannot infer developer intent. Several developers report that these tools generate tests that assume the code is correct, so the tests pass even when the code is wrong. One commenter noted, 'The AI just writes tests that always pass because it assumes your code is correct.' The result is a dangerous false sense of security: developers may believe their code is properly tested when it is not.
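To make the failure mode concrete, here is a minimal sketch in Python. The function `apply_discount` and both test functions are hypothetical, invented for illustration. The first test derives its expected value from the code's own formula, so it passes despite the bug. The second takes its expected value from the requirement and catches it.

```python
def apply_discount(price: float, percent: float) -> float:
    """Intended behavior: reduce `price` by `percent` percent.

    Deliberate bug for this example: divides by 10 instead of 100.
    """
    return price - price * percent / 10


def test_mirrors_implementation() -> bool:
    # The expected value repeats the code's own (buggy) formula,
    # so the test can only confirm that the code does what it does.
    expected = 200.0 - 200.0 * 10 / 10
    return apply_discount(200.0, 10) == expected


def test_checks_intent() -> bool:
    # The expected value comes from the requirement: 10% off 200 is 180.
    return apply_discount(200.0, 10) == 180.0


if __name__ == "__main__":
    print("mirrors implementation passes:", test_mirrors_implementation())
    print("checks intent passes:", test_checks_intent())
```

The first test is the kind of result the commenters describe: it is green, it adds to coverage, and it verifies nothing about what the function was supposed to do.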

Another major pain point is the quality of generated tests. Many developers report spending more time fixing AI-generated tests than it would have taken to write them from scratch. Comments like 'a lot of tests turned out to be a lie' and 'half of them were nonsense and 80% failed' highlight the severity of the problem. Particularly in UI testing, where context and user flows are crucial, current AI solutions fall short.

A Potential SaaS Solution: Context-Aware Test Generation

Imagine a SaaS platform that goes beyond simple test generation by incorporating deep context analysis of both the codebase and developer intent. This hypothetical solution would analyze commit messages, PR descriptions, and even developer comments to better understand what the code is meant to accomplish. By building a more comprehensive picture of the development context, the system could generate tests that actually verify the intended functionality rather than just confirming the code executes.
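As a rough illustration of what combining these context sources could look like, the sketch below gathers the intent signals for one change into a single labelled text block. Everything here is an assumption made for the example: the `ChangeContext` class, its field names, and `build_intent_summary` do not come from any existing tool, and a real platform would need far richer analysis than string concatenation.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeContext:
    """Hypothetical bundle of intent signals for one code change."""
    commit_message: str
    pr_description: str = ""
    code_comments: list[str] = field(default_factory=list)


def build_intent_summary(ctx: ChangeContext) -> str:
    """Join the non-empty signals into one labelled block of text.

    The result is meant as input for a test generator, so that it sees
    what the change is supposed to do and not only the code itself.
    """
    parts = [
        ("Commit", ctx.commit_message.strip()),
        ("PR", ctx.pr_description.strip()),
    ]
    parts += [("Comment", comment.strip()) for comment in ctx.code_comments]
    # Skip empty signals so the summary contains only real information.
    return "\n".join(f"{label}: {text}" for label, text in parts if text)


if __name__ == "__main__":
    ctx = ChangeContext(
        commit_message="Fix discount rounding",
        code_comments=["percent is expressed on a 0-100 scale"],
    )
    print(build_intent_summary(ctx))
```

The point of the structure is that intent lives outside the code under test, so a generator fed this summary has something to check the implementation against.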

Key features might include: intelligent test case prioritization based on code changes, integration with project documentation to understand requirements, and the ability to learn from previous test failures to improve future suggestions. The platform could also provide 'test confidence scores' to help developers assess the reliability of generated tests before committing them.

Potential Benefits and Use Cases

For development teams, this solution could dramatically reduce the time spent fixing faulty tests while actually improving test coverage. Junior developers could benefit from AI-generated tests that serve as learning tools, showing them what good tests for their code should look like. In CI/CD pipelines, more reliable automated tests could catch real issues earlier without creating false alarms that slow down deployment.

The system could be particularly valuable in legacy codebases where documentation is sparse. By analyzing patterns across the entire codebase, it could suggest tests that capture the implicit knowledge embedded in the code structure itself. For teams practicing test-driven development, it could help bridge the gap between initial specifications and executable tests.

Conclusion

While current AI testing tools often disappoint, the potential for a more sophisticated, context-aware solution is clear. By addressing the root cause of today's failures, primarily the lack of understanding of developer intent, a well-designed SaaS platform could transform automated testing from a time sink into a genuine productivity booster. The key will be balancing automation with the human judgment that nuanced cases still require.

Frequently Asked Questions

- How would this SaaS solution differ from existing AI testing tools?

- Unlike current tools that focus solely on code analysis, this hypothetical solution would incorporate multiple context sources - commit messages, PR discussions, documentation - to better understand developer intent and generate more accurate tests.

- Would this completely replace manual test writing?

- No. The goal would be to handle routine test cases while flagging complex scenarios that might require human attention, effectively augmenting rather than replacing developer work.

- How could this solution handle UI testing challenges?

- By analyzing user flows and design documentation, it could generate more robust UI tests that account for actual user behavior patterns rather than just checking element existence.