AI Content Authenticity Crisis: How a SaaS Solution Could Restore Trust

The rise of AI-generated deepfakes has created a crisis of authenticity across digital platforms. From job interviews to romantic relationships, hyper-realistic synthetic content is undermining trust at an alarming rate. This article explores the growing problem and proposes a potential SaaS solution that could help verify content authenticity in an increasingly synthetic world.

The Deepfake Epidemic: A Crisis of Digital Trust

Recent studies report a 900% increase in AI-related scams in just two years, with half of businesses experiencing deepfake fraud attempts. The problem extends far beyond corporate security: romance scams, elder fraud, and identity theft are all being supercharged by AI's ability to create convincing synthetic media. What makes this particularly alarming is how accessible these tools have become. Creating a believable deepfake now takes under 10 minutes with publicly available AI tools.

The Core Problem: No Universal Verification Standard

Current attempts to identify synthetic content rely on either human intuition (noticing odd mouth movements or hand anomalies) or proprietary detection systems used by security firms. There's no universal standard for labeling AI-generated content, leaving consumers vulnerable and platforms struggling to moderate content. User comments reveal widespread demand for mandatory AI labeling and better verification tools, highlighting a clear market need.

SaaS Solution: AI Content Authentication Platform

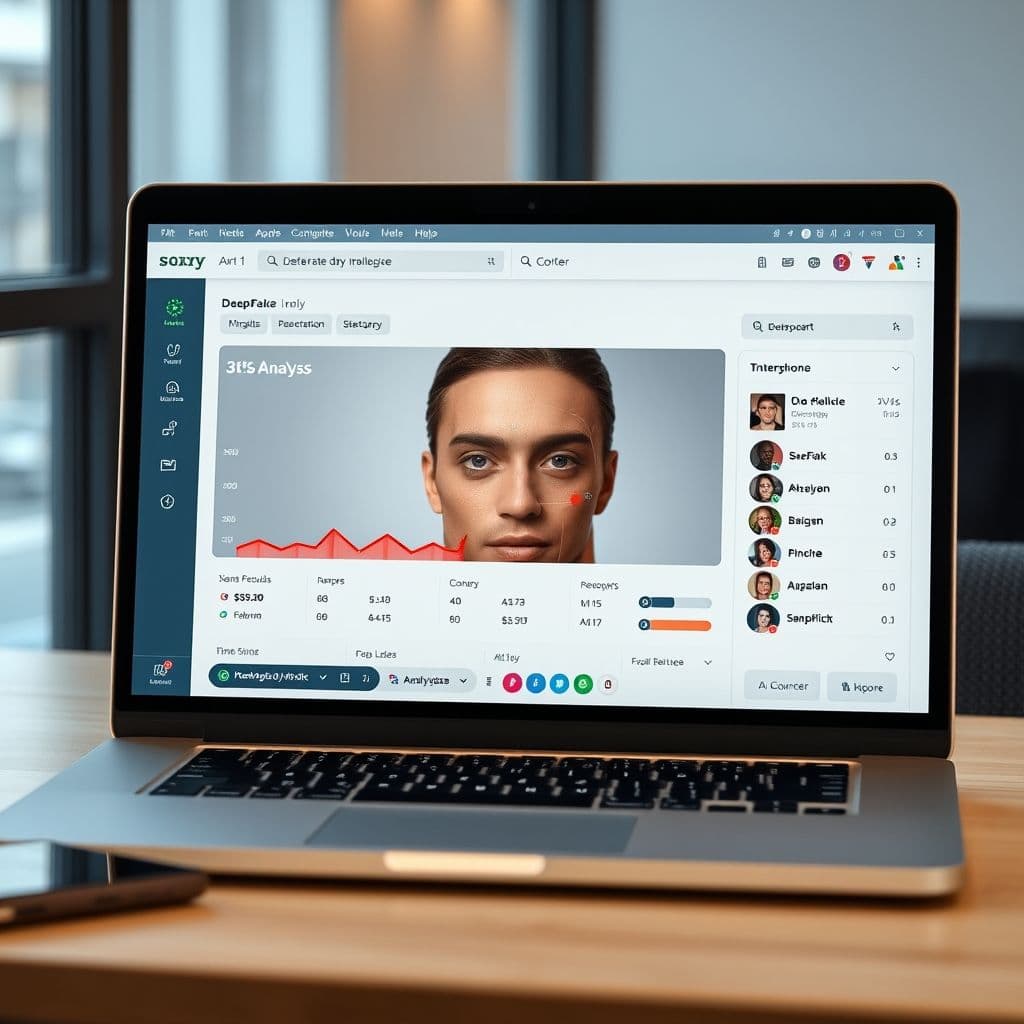

A potential SaaS solution could combine multiple verification methods into a unified platform. This hypothetical service might offer real-time deepfake detection, blockchain-based content certification, and standardized authenticity labeling. The system could analyze videos, images, and audio files for synthetic artifacts while maintaining a tamper-evident record of verification results.
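
As a rough illustration of what a record of verification results that resists tampering could look like, the sketch below chains each result to the previous one with SHA-256, which is the basic idea a blockchain builds on. It is a minimal sketch under stated assumptions, not a design for the platform: the function names, the field names (`verdict`, `score`), and the all-zero genesis value are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_result(log: list, content: bytes, verdict: str, score: float) -> dict:
    """Append one verification result, chained to the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    payload = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "verdict": verdict,  # hypothetical label, e.g. "likely_synthetic"
        "score": score,      # hypothetical detector score in [0, 1]
    }
    entry = {
        "prev_hash": prev_hash,
        "payload": payload,
        "hash": entry_hash(prev_hash, payload),
    }
    log.append(entry)
    return entry


def chain_is_intact(log: list) -> bool:
    """Recompute every hash; editing any stored entry makes this return False."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this only makes edits detectable. Someone who controls the whole log could still rebuild it from scratch, which is why a real service would likely publish or replicate the latest hash somewhere it cannot quietly rewrite.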

Key features might include browser extensions for real-time verification, API integration for platforms, and customizable authentication certificates. The service could use a combination of forensic analysis, behavioral biometrics, and cryptographic watermarking to provide multi-layered verification.
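
One simple way to picture multi-layered verification is a weighted combination of per-method scores that is then mapped to a label. The sketch below assumes each method reports a score between 0 (looks authentic) and 1 (looks synthetic). The `Signal` type, the weights, and the thresholds are all hypothetical choices for illustration, not values from any real detector.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    name: str               # e.g. "forensic", "behavioral", "watermark"
    synthetic_score: float  # 0.0 = looks authentic, 1.0 = looks synthetic
    weight: float           # how much this method is trusted (assumed value)


def combined_score(signals: list) -> float:
    """Weighted average of the per-method synthetic scores."""
    total_weight = sum(s.weight for s in signals)
    if total_weight <= 0:
        raise ValueError("at least one signal with positive weight is required")
    return sum(s.synthetic_score * s.weight for s in signals) / total_weight


def label(score: float, flag_at: float = 0.7, clear_at: float = 0.3) -> str:
    """Map a combined score to a coarse label using assumed thresholds."""
    if score >= flag_at:
        return "likely synthetic"
    if score <= clear_at:
        return "no synthetic artifacts detected"
    return "inconclusive"


if __name__ == "__main__":
    signals = [
        Signal("forensic", 0.9, 2.0),
        Signal("behavioral", 0.6, 1.0),
        Signal("watermark", 1.0, 1.0),
    ]
    print(label(combined_score(signals)))
```

Keeping an explicit "inconclusive" band reflects the point that no method is fully reliable. Borderline content can be routed to human review instead of being forced into a yes or no answer.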

Potential Use Cases and Market Opportunities

Such a platform could serve multiple markets: dating apps verifying profile videos, HR departments screening job applicants, news organizations authenticating user-generated content, and social media platforms moderating synthetic media. The service could offer tiered pricing based on verification volume and integration depth, creating multiple revenue streams while addressing a critical digital trust issue.
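
To make volume-based tiered pricing concrete, the sketch below computes a monthly charge from graduated tiers, where each verification is billed at the rate of the tier it falls into. The tier boundaries and per-verification prices are invented purely for illustration, and amounts are kept in integer cents to avoid floating-point rounding.

```python
# Hypothetical graduated tiers: (upper bound in verifications, price in cents each).
# None marks the open-ended top tier.
TIERS = [(1_000, 5), (10_000, 3), (None, 1)]


def monthly_charge_cents(verifications: int) -> int:
    """Bill each verification at the rate of the tier it falls into."""
    if verifications < 0:
        raise ValueError("verifications must be non-negative")
    charge = 0
    lower = 0  # number of verifications already billed in lower tiers
    for upper, price in TIERS:
        if upper is None or verifications <= upper:
            # The remaining volume ends inside this tier.
            return charge + (verifications - lower) * price
        charge += (upper - lower) * price
        lower = upper
    return charge
```

With these assumed tiers, 2,500 verifications would cost 1,000 × 5 + 1,500 × 3 = 9,500 cents.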

Conclusion

As AI-generated content becomes harder to distinguish from reality, the need for reliable verification tools grows more urgent. While technical challenges remain in detecting increasingly sophisticated deepfakes, a comprehensive SaaS solution could provide much-needed infrastructure for restoring digital trust. The market opportunity is clear, and the societal need is pressing. The open question is who will develop this crucial verification layer for the AI age.

Frequently Asked Questions

- How accurate could an AI content verification SaaS realistically be?

- While no solution can be 100% accurate, a multi-method approach combining forensic analysis, behavioral patterns, and cryptographic verification could achieve high reliability. The system would need continuous updates to keep pace with advancing deepfake technology.

- What would prevent bad actors from faking the verification certificates?

- A robust system would cryptographically sign each certificate and could record it on a blockchain or a similar tamper-evident ledger, so that forged or altered credentials fail verification. Each certificate would include cryptographic proof of the verification process and results.
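
The "cryptographic proof" in a certificate can be sketched with a keyed hash over the certificate's fields. This is a minimal sketch using only Python's standard library, and it swaps in HMAC-SHA256 for simplicity. A public service would more plausibly use an asymmetric signature scheme so that anyone could check a certificate without holding the secret key. The certificate fields and function names are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(fields: dict) -> bytes:
    """Serialize fields deterministically so the same data always yields the same tag."""
    return json.dumps(fields, sort_keys=True, separators=(",", ":")).encode("utf-8")


def issue_certificate(content: bytes, verdict: str, key: bytes) -> dict:
    """Bind a verdict to the exact content bytes and tag it with HMAC-SHA256."""
    fields = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "verdict": verdict,  # hypothetical label, e.g. "likely_authentic"
    }
    tag = hmac.new(key, _canonical(fields), hashlib.sha256).hexdigest()
    return {**fields, "tag": tag}


def certificate_is_valid(cert: dict, content: bytes, key: bytes) -> bool:
    """Check that the tag matches the fields and the fields match the content."""
    fields = {k: v for k, v in cert.items() if k != "tag"}
    expected = hmac.new(key, _canonical(fields), hashlib.sha256).hexdigest()
    tag_ok = hmac.compare_digest(expected, cert.get("tag", ""))
    content_ok = fields.get("content_sha256") == hashlib.sha256(content).hexdigest()
    return tag_ok and content_ok
```

Changing the verdict, swapping the media file, or using a different key all cause `certificate_is_valid` to return `False`, which is the property the answer above relies on.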

- How could this SaaS solution integrate with existing platforms?

- The service could offer API integrations for social media platforms, browser extensions for individual users, and SDKs for app developers. This would allow verification at multiple points in the content lifecycle.
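
As a rough picture of the API integration path, the sketch below shows how a verification endpoint might validate a request and shape its response. Everything here is an assumption for illustration: the request fields (`media_base64`, `media_type`), the status codes chosen, and the `detector` callable, which stands in for whatever analysis the service would actually run.

```python
import base64
import json

SUPPORTED_TYPES = {"video", "image", "audio"}


def handle_verify_request(raw_body: str, detector) -> tuple:
    """Return (http_status, json_body) for a hypothetical verification call.

    `detector` is any callable taking (media_bytes, media_type) and
    returning a synthetic-likelihood score between 0 and 1.
    """
    try:
        body = json.loads(raw_body)
        media = base64.b64decode(body["media_base64"], validate=True)
        media_type = body["media_type"]
    except (ValueError, KeyError, TypeError):
        # Covers malformed JSON, missing fields, and invalid base64.
        return 400, json.dumps({"error": "invalid request"})

    if media_type not in SUPPORTED_TYPES:
        return 422, json.dumps({"error": "unsupported media type"})

    score = detector(media, media_type)
    return 200, json.dumps({"media_type": media_type, "synthetic_score": score})
```

Because the handler is a plain function over strings, the same logic could sit behind a web framework route, a browser extension's backend, or an SDK wrapper.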